In a notable advance for artificial intelligence in healthcare, OpenAI’s o1-preview model has demonstrated superhuman performance in medical diagnosis and the evaluation of emergency cases, according to a major study published this week. The model, an early release of OpenAI’s o1 reasoning system, significantly outperformed both its predecessor GPT-4 and experienced physicians across a wide range of clinical tasks. The result points to a major shift in how medical decisions may be made in the future.

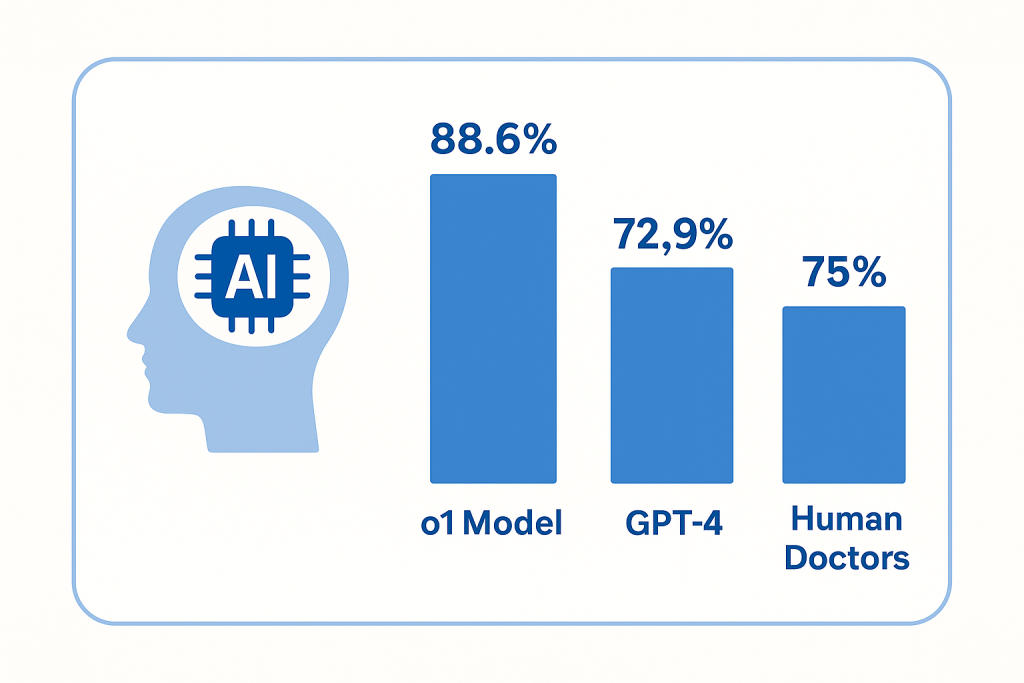

The study, led by researchers from top academic institutions in collaboration with OpenAI, benchmarked o1 against both generalist and specialist physicians in diagnosing complex clinical cases. The results were striking: the o1 model achieved an overall accuracy of 88.6% in diagnostic tasks, outperforming GPT-4, which scored 72.9%, and exceeding the average performance of practicing physicians.

Perhaps most notably, o1 answered 78 of the 80 clinical cases sourced from the New England Journal of Medicine (NEJM) correctly. NEJM’s published case records are widely used as a benchmark for diagnostic reasoning. These cases are often used to challenge even the most skilled clinicians, and they include intricate patient histories, ambiguous symptoms, and scenarios requiring sophisticated differential diagnosis: the practice of distinguishing between two or more conditions that share similar signs or symptoms.
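To put these figures in perspective, the short Python sketch converts the 78-of-80 result into a percentage and expresses o1’s lead over GPT-4 as both an absolute and a relative gap. It uses only the numbers reported here. The helper name `accuracy` is purely illustrative. Because the two headline figures differ, they appear to describe different parts of the evaluation and should not be compared directly.

```python
def accuracy(correct: int, total: int) -> float:
    """Fraction of cases answered correctly (illustrative helper)."""
    return correct / total

# NEJM case set: 78 of 80 answered correctly.
nejm_accuracy = accuracy(78, 80)

# Overall diagnostic accuracy as reported: o1 88.6%, GPT-4 72.9%.
o1_score, gpt4_score = 88.6, 72.9
gap_points = o1_score - gpt4_score       # absolute gap, in percentage points
relative_gain = gap_points / gpt4_score  # gap relative to GPT-4's score

print(f"NEJM cases: {nejm_accuracy:.1%}")
print(f"Gap: {gap_points:.1f} percentage points ({relative_gain:.1%} relative to GPT-4)")
```

This gives 97.5% for the NEJM cases and a 15.7-point gap, which is roughly a 21.5% improvement relative to GPT-4’s score.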

Outperforming the Experts

The study assessed o1 across several dimensions of clinical reasoning. Beyond raw diagnostic accuracy, the model was evaluated on differential diagnosis (the ability to consider and rank multiple possible causes of a patient’s condition), test-selection strategy, and management decisions, such as recommending further investigations or therapeutic steps.

In test-selection tasks, o1 significantly outperformed both human doctors and GPT-4. This measure assesses how well a clinician decides which diagnostic tests are most appropriate, balancing the need for a thorough investigation against the cost, invasiveness, and timeliness of each test. The model’s proficiency here suggests a strong grasp of clinical workflows and decision trees, comparable to that of seasoned practitioners.

On management decisions, o1 again outperformed every comparison group. This indicates a well-rounded ability not only to diagnose but also to recommend evidence-based, safe, and effective patient-care strategies.

Researchers also evaluated the model on medical vignettes simulating real-world scenarios seen in emergency departments. These required interpreting patient complaints, vital signs, lab results, and imaging reports to reach accurate diagnoses and decisions under time pressure. The model’s ability to work through complex and often ambiguous data and arrive at clinically sound conclusions highlights its potential value in high-stakes, time-sensitive settings such as emergency rooms.

Strengths and Limitations

What sets o1 apart is its reasoning ability. Unlike earlier models that could regurgitate medical facts or mimic step-by-step logic without genuine understanding, o1 appears capable of synthesizing large volumes of data (patient history, symptoms, and test results) and applying nuanced clinical judgment.

The model’s ability to offer concise, relevant, and actionable insights could make it an invaluable assistant in the hands of overburdened healthcare workers. It could help reduce diagnostic errors, speed up decision-making, and ensure more consistent adherence to evidence-based guidelines.

Despite its impressive performance, however, o1 is not without limitations. The study found that the model showed no significant improvement over GPT-4 in two areas. The first is probabilistic reasoning, such as estimating the likelihood of rare diseases. The second is triage, where determining the urgency and priority of cases is critical.
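In clinical practice, probabilistic reasoning often means updating how likely a disease is as test results arrive. Formally, this is Bayes’ theorem applied to a test’s sensitivity and specificity. The sketch is a minimal illustration using hypothetical numbers: a 1% pretest probability and a test that is 95% sensitive and 95% specific. It is not the study’s evaluation method. It only shows the kind of estimate such tasks involve, and the function name `posttest_probability` is illustrative.

```python
def posttest_probability(pretest: float, sensitivity: float, specificity: float) -> float:
    """Probability of disease after a positive test result, via Bayes' theorem."""
    true_positive = sensitivity * pretest                 # P(positive and disease)
    false_positive = (1.0 - specificity) * (1.0 - pretest)  # P(positive and no disease)
    return true_positive / (true_positive + false_positive)

# Hypothetical example: a rare disease (1% pretest probability)
# tested with a 95%-sensitive, 95%-specific test.
p = posttest_probability(pretest=0.01, sensitivity=0.95, specificity=0.95)
print(f"{p:.1%}")  # prints 16.1%
```

Even after a positive result from a fairly accurate test, the rare condition remains unlikely, at about 16%. This happens because false positives from the much larger healthy population outnumber the true positives, which is why such estimates are hard to get right intuitively.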

These shortcomings are not insignificant. Triage, in particular, is a key component of emergency medicine and disaster response. Over- or underestimating the severity of a condition can lead to dangerous delays or misallocation of resources. As such, the researchers caution against viewing o1 as a standalone solution for all medical tasks.

Ethical and Practical Implications

The study’s findings come amid growing interest in the role AI can play in augmenting — or even one day replacing — certain functions traditionally performed by physicians. From radiology and pathology to primary care and emergency medicine, AI is being trained to recognize patterns, analyze test results, and suggest treatments. However, the integration of AI into real-world clinical settings is fraught with ethical, legal, and regulatory challenges.

Key among these is the question of responsibility. If an AI model makes a recommendation that leads to a poor patient outcome, who is liable — the doctor, the hospital, or the developers of the model? Further concerns center on algorithmic bias, data privacy, and the need for transparency in how these models reach their conclusions.

To address these concerns, the researchers behind the o1 study emphasized the importance of clinical trials in real-world settings before deploying the model in hospitals or clinics. While retrospective case evaluations and simulations can offer strong insights, only prospective studies involving real patients can truly validate the safety and efficacy of the system.

The researchers also urge that AI be viewed as a complement to, rather than a replacement for, human expertise. AI could offer much-needed support in settings where doctors are overwhelmed, under-resourced, or stretched too thin, particularly in developing nations or during public health emergencies. But critical thinking, empathy, and human judgment remain essential components of good medicine.

A Glimpse into the Future of Healthcare

This development adds to a growing body of evidence that large language models are beginning to cross the threshold from general-purpose tools into highly specialized domains.

OpenAI’s o1-preview model was initially developed as a general reasoning system. On benchmark cases and simulations, it has now shown that it can excel in one of the most complex and sensitive fields: medicine. For healthcare systems around the world, the implications are profound. In resource-poor settings, where access to skilled clinicians is limited, AI tools like o1 could help close the care gap. In high-resource hospitals, they could reduce cognitive load on physicians, cut down on burnout, and improve patient outcomes through second-opinion diagnostics.

The next frontier will involve embedding these models into existing healthcare infrastructure — from electronic health records and decision-support systems to telemedicine platforms and diagnostic kiosks. Training the model further on local population data, languages, and healthcare practices will be essential for global relevance.

As artificial intelligence continues to evolve, OpenAI’s o1 model represents a powerful example of what’s possible when cutting-edge technology meets the pressing needs of modern healthcare.

The vision of an AI-augmented health system, where machines and doctors work side by side to deliver faster, safer, and more accurate care, is no longer science fiction — it’s on the horizon.