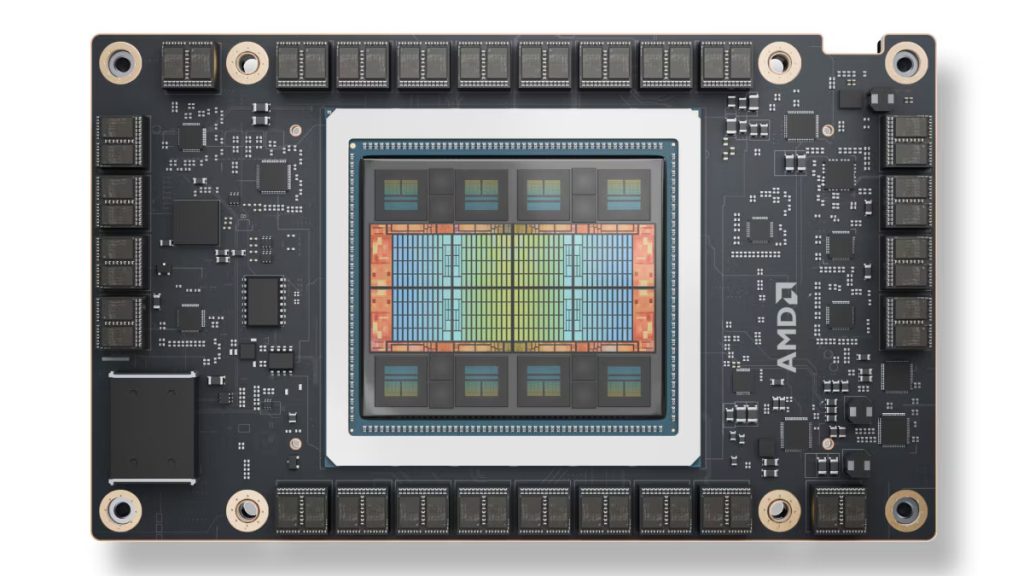

AMD announced its latest AI accelerator chip, the MI325X, at an event in San Francisco on Thursday. Set to roll out to data center customers in the fourth quarter of this year, the MI325X is positioned as a direct competitor to Nvidia’s H200 GPUs, which dominate the AI processing market.

At the event, AMD emphasized the MI325X’s “industry-leading” performance, highlighting its potential to narrow the competitive gap with Nvidia. The company also revealed plans for its next-generation MI350 chip, which is slated for release in the second half of 2025 and will take on Nvidia’s upcoming Blackwell system.

Lisa Su, AMD’s CEO, expressed her ambition for the company to emerge as the “end-to-end” leader in the AI space over the next decade. “This is the beginning, not the end of the AI race,” she stated in an interview with the Financial Times.

The MI325X packs 153 billion transistors built on TSMC’s 5 nm and 6 nm FinFET processes. It contains 19,456 stream processors and 1,216 matrix cores across 304 compute units, with a peak engine clock of 2,100 MHz. The chip delivers up to 2.61 PFLOPS of peak eight-bit precision (FP8) performance and 1.3 PFLOPS for half-precision (FP16) operations.
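As a quick sanity check on those figures, the per-compute-unit breakdown and the FP8-to-FP16 ratio can be worked out directly from the numbers quoted above. This is a minimal sketch that uses only the article's figures; the variable names are illustrative and do not come from AMD.

```python
# Figures as quoted in the article for the MI325X.
stream_processors = 19_456
matrix_cores = 1_216
compute_units = 304
fp8_pflops = 2.61   # peak FP8 throughput, PFLOPS
fp16_pflops = 1.3   # peak FP16 throughput, PFLOPS

# Resources per compute unit (integer division; both divide evenly).
sp_per_cu = stream_processors // compute_units
mc_per_cu = matrix_cores // compute_units

# Halving the precision roughly doubles the peak throughput.
fp8_to_fp16 = fp8_pflops / fp16_pflops

print(f"stream processors per CU: {sp_per_cu}")
print(f"matrix cores per CU:      {mc_per_cu}")
print(f"FP8 / FP16 peak ratio:    {fp8_to_fp16:.2f}")
```

The quoted totals work out to 64 stream processors and 4 matrix cores per compute unit, with FP8 peak throughput at about twice the FP16 figure.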

The announcement comes as Nvidia prepares to deploy its Blackwell chips this quarter. Nvidia leads the AI chip market, projected to achieve $26.3 billion in AI data center chip sales for the quarter ending in July. In contrast, AMD anticipates $4.5 billion in AI chip sales for all of 2024. The Nvidia figure covers a single quarter and AMD’s covers a full year, which underlines how wide the gap between the two companies remains.

AMD has secured Microsoft and Meta as customers for its MI300 AI GPUs, with potential interest from Amazon. The new MI325X aims to capitalize on the growing demand for AI chips driven by applications like OpenAI’s ChatGPT, which require extensive data center resources.

“AI demand has actually continued to take off and actually exceed expectations,” Su noted. She underscored that the rapid investment in AI technology reflects a broader trend across various sectors.

While AMD has emerged as a formidable competitor, it still faces challenges in gaining market share. Analysts indicate that Nvidia’s CUDA programming platform has become a standard in AI development, effectively locking developers into Nvidia’s ecosystem. To address this, AMD has enhanced its ROCm software, aiming to smooth the transition for developers moving to its AI accelerators.
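To make the software point concrete, here is a minimal sketch of how a developer might check which backend a PyTorch installation targets. It assumes PyTorch as the framework, which the article does not mention. ROCm builds of PyTorch expose AMD GPUs through the same `torch.cuda` interface and set `torch.version.hip`, while CUDA builds set `torch.version.cuda`. The helper `describe_backend` is a hypothetical name introduced here for illustration.

```python
from typing import Optional


def describe_backend(hip_version: Optional[str], cuda_version: Optional[str]) -> str:
    """Classify a PyTorch build from its version strings (hypothetical helper)."""
    if hip_version is not None:
        return f"ROCm/HIP build ({hip_version})"
    if cuda_version is not None:
        return f"CUDA build ({cuda_version})"
    return "CPU-only build"


if __name__ == "__main__":
    try:
        import torch  # optional: only needed to inspect a real install
    except ImportError:
        print("PyTorch is not installed; nothing to inspect")
    else:
        # torch.version.hip is a string on ROCm builds and None otherwise.
        print(describe_backend(torch.version.hip, torch.version.cuda))
        # On ROCm builds this reports AMD GPUs through the torch.cuda API.
        print("accelerator visible:", torch.cuda.is_available())
```

Because the ROCm build reuses the `torch.cuda` namespace, code written against that interface can often run on AMD hardware without changes, though that depends on the libraries and kernels a given project uses.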

AMD contends that its MI325X chip is particularly effective at generating content and making predictions, the workloads known as inference, citing performance advantages over Nvidia’s H200 on specific models. “What you see is that the MI325 platform delivers up to 40% more inference performance than the H200 on Llama 3.1,” Su remarked, referencing Meta’s large language model.

In addition to its focus on AI accelerators, AMD continues to develop its central processing unit (CPU) offerings. The company’s data center sales surged to $2.8 billion in the June quarter, with AI chips accounting for about $1 billion. AMD aims to increase its presence in the CPU market, where it currently holds a 34% share, compared to Intel’s dominant position.
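The share of data center revenue that came from AI chips follows directly from the two figures above. A minimal sketch, treating the article's "about $1 billion" as exactly 1.0 for the purpose of the calculation:

```python
# June-quarter figures from the article, in billions of US dollars.
data_center_sales = 2.8
ai_chip_sales = 1.0  # "about $1 billion"; treated as exact here (assumption)

ai_share = ai_chip_sales / data_center_sales
non_ai_sales = data_center_sales - ai_chip_sales

print(f"AI chips as share of data center sales: {ai_share:.1%}")
print(f"Remaining data center sales: ${non_ai_sales:.1f}B")
```

On these numbers, AI chips made up a little over a third of AMD's data center sales for the quarter.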

On the CPU front, AMD introduced its new EPYC 5th Gen line, designed to enhance performance for data-intensive AI workloads. The new CPUs range from low-cost, low-power options to high-performance 192-core processors aimed at supercomputers, with prices ranging from $527 to $14,813 per chip.

“Today’s AI is really about CPU capability, and you see that in data analytics and a lot of those types of applications,” Su said, emphasizing the synergy between CPUs and GPUs in AI environments.

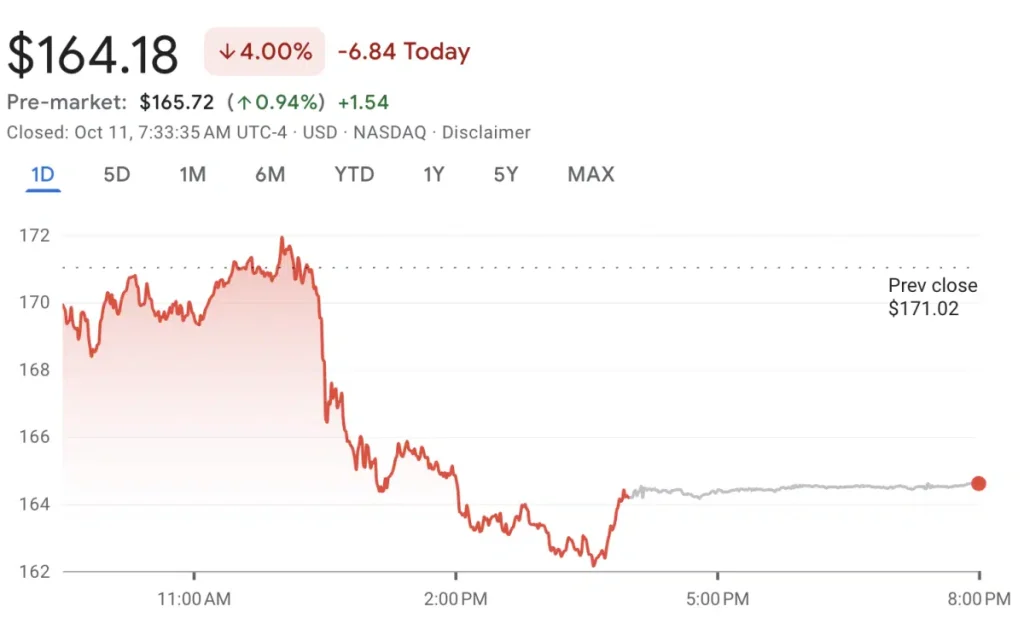

Despite AMD’s optimistic outlook, the market response to the new chip announcement was mixed. AMD’s stock fell by 4% on Thursday, while Nvidia shares rose by about 1%. Analysts attributed the decline to the absence of new major cloud-computing customers for AMD’s latest chips, raising concerns about its ability to penetrate Nvidia’s stronghold in the market.

The competitive landscape is evolving rapidly, with both AMD and Nvidia vying for leadership in the burgeoning AI chip sector. AMD is committed to accelerating its product development cycle, with plans to release new chips annually, including the upcoming MI350 and MI400 models.

As the demand for AI infrastructure grows, AMD is positioning itself as a viable alternative to Nvidia, aiming to capture a significant portion of the projected $400 billion AI chip market by 2027 and $500 billion by 2028. The success of the MI325X could not only bolster AMD’s market position but also attract investor interest amid a rapidly changing technological landscape.

With the AI race heating up, AMD’s advancements in AI chip technology and its strategic partnerships could pave the way for a more competitive future against Nvidia’s established dominance.

Copyright©dhaka.ai
