Google has officially launched Gemma 2, a new generation of open AI models designed to make advanced artificial intelligence more accessible and efficient. Available in 9 billion (9B) and 27 billion (27B) parameter sizes, Gemma 2 improves on its predecessor in performance, efficiency, and safety.

The Gemma initiative, which began earlier this year, aims to democratize AI by providing lightweight, state-of-the-art models derived from the same research and technology behind Google’s Gemini models. The Gemma family has expanded to include specialized variants like CodeGemma, RecurrentGemma, and PaliGemma, each tailored for specific AI tasks.

Gemma 2’s standout feature is its exceptional performance-to-size ratio. The 27B model rivals the capabilities of models more than twice its size, setting a new standard for efficiency in open AI models. This level of performance can be achieved on a single NVIDIA H100 Tensor Core GPU or TPU host, significantly reducing deployment costs and making high-performance AI more accessible to a broader range of users.

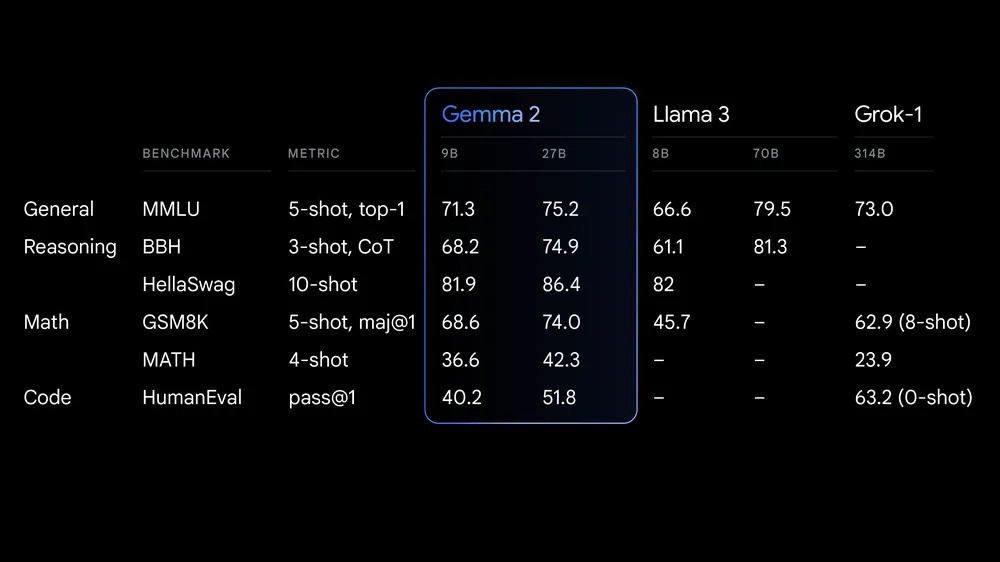

The architecture of Gemma 2 has been redesigned to optimize both performance and inference efficiency. In benchmark tests, the 27B model leads its size class, while the 9B model outperforms competitors like Llama 3 8B. This efficiency translates to cost savings and increased accessibility, as the models can run full-precision inference on a single Google Cloud TPU host, NVIDIA A100 80GB Tensor Core GPU, or NVIDIA H100 Tensor Core GPU.
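The single-accelerator claim can be sanity-checked with back-of-the-envelope arithmetic. The sketch below is illustrative only: it uses the nominal 9B and 27B parameter counts rather than exact checkpoint sizes, counts weights only (no KV cache or activations), and assumes that "full precision" refers to 16-bit bfloat16 weights. The function and dictionary names are hypothetical.

```python
# Nominal parameter counts from the article; real checkpoints differ slightly.
NOMINAL_PARAMS = {"gemma-2-9b": 9e9, "gemma-2-27b": 27e9}

# Bytes needed to store one weight at each precision.
BYTES_PER_PARAM = {"float32": 4.0, "bfloat16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(num_params: float, dtype: str) -> float:
    """Return the memory needed for the weights alone, in decimal gigabytes."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


def weights_fit(num_params: float, dtype: str, device_gb: float = 80.0) -> bool:
    """Check whether the weights alone fit in device_gb of accelerator memory."""
    return weight_memory_gb(num_params, dtype) < device_gb


if __name__ == "__main__":
    for name, params in NOMINAL_PARAMS.items():
        for dtype in BYTES_PER_PARAM:
            size = weight_memory_gb(params, dtype)
            verdict = "fits" if weights_fit(params, dtype) else "does not fit"
            print(f"{name} {dtype}: {size:.1f} GB, {verdict} in 80 GB")
```

Under these assumptions the 27B weights take about 54 GB in bfloat16, which leaves some headroom on an 80 GB device, whereas 32-bit weights would need about 108 GB.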

Gemma 2 is optimized for fast inference across various hardware configurations, from gaming laptops to high-end desktops and cloud-based setups. Users can try the full-precision models in Google AI Studio, run quantized versions locally on a CPU using gemma.cpp, or deploy the models on home computers with NVIDIA RTX or GeForce RTX GPUs via Hugging Face Transformers.
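As a rough illustration of the Hugging Face Transformers route, the sketch below loads an instruction-tuned checkpoint and generates a reply to one prompt. Several things here are assumptions rather than details from the announcement: the Hub repository id `google/gemma-2-9b-it`, a recent `transformers` release with Gemma 2 support, the `torch` and `accelerate` packages, and an account that has accepted the model license. The helper names `build_messages` and `generate` are hypothetical.

```python
MODEL_ID = "google/gemma-2-9b-it"  # assumed Hub repository id


def build_messages(prompt: str) -> list[dict[str, str]]:
    """Wrap a prompt as a single user turn in chat-message form."""
    return [{"role": "user", "content": prompt}]


def generate(prompt: str, model_id: str = MODEL_ID, max_new_tokens: int = 128) -> str:
    """Load the model and return its reply to one prompt."""
    # Imported lazily so build_messages works without the heavy dependencies.
    import torch
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_id)
    model = AutoModelForCausalLM.from_pretrained(
        model_id,
        torch_dtype=torch.bfloat16,  # 16-bit weights
        device_map="auto",  # places layers on available devices; needs accelerate
    )

    # Format the conversation with the model's own chat template.
    inputs = tokenizer.apply_chat_template(
        build_messages(prompt),
        add_generation_prompt=True,
        return_dict=True,
        return_tensors="pt",
    ).to(model.device)

    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)

    # Decode only the newly generated tokens, not the echoed prompt.
    prompt_length = inputs["input_ids"].shape[-1]
    return tokenizer.decode(output_ids[0][prompt_length:], skip_special_tokens=True)
```

Calling `generate("Summarize Gemma 2 in one sentence.")` would download the weights on first use. On GPUs with less memory, a quantized build is likely the more practical option.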

Accessibility is a key focus of the Gemma 2 release. The models are available under a commercially friendly license, allowing developers and researchers to share and commercialize their innovations. Gemma 2 is compatible with major AI frameworks including Hugging Face Transformers, JAX, PyTorch, and TensorFlow via native Keras 3.0. It also works with vLLM, gemma.cpp, llama.cpp, and Ollama. The models are optimized with NVIDIA TensorRT-LLM for NVIDIA-accelerated infrastructure, with NVIDIA NeMo integration coming soon.

Google Cloud customers will soon be able to easily deploy and manage Gemma 2 on Vertex AI. To assist developers in building applications and fine-tuning the models, Google has introduced the Gemma Cookbook, which offers practical examples and recipes for various tasks, including retrieval-augmented generation.

Responsible AI development remains a priority for Google. The company provides resources like the Responsible Generative AI Toolkit to help developers build and deploy AI responsibly. The open-sourced LLM Comparator tool, along with its new Python library, allows for in-depth evaluation and comparison of language models. Google is also working on open-sourcing SynthID, its text watermarking technology, for Gemma models.

Google emphasizes its commitment to safety in the development of Gemma 2. The company employs robust internal safety processes, including filtering pre-training data and conducting rigorous testing against comprehensive metrics to identify and mitigate potential biases and risks. Google publishes the results of these evaluations on a set of public benchmarks related to safety and representational harms.

The first Gemma release saw over 10 million downloads and inspired numerous projects, such as Navarasa’s AI model celebrating India’s linguistic diversity. With Gemma 2’s enhanced capabilities, Google anticipates even more ambitious and innovative projects from the developer community.

Gemma 2 is now available for testing in Google AI Studio, allowing users to experience its full capabilities without hardware constraints. The model weights can be downloaded from Kaggle and Hugging Face Models, with availability on Vertex AI Model Garden coming soon. To support research and development, Gemma 2 is offered free of charge on Kaggle or via a free tier for Colab notebooks. First-time Google Cloud customers may be eligible for $300 in credits, and academic researchers can apply for the Gemma 2 Academic Research Program to receive additional Google Cloud credits, with applications open until August 9.

Looking ahead, Google has announced plans to release a 2.6 billion parameter Gemma 2 model, designed to bridge the gap between lightweight accessibility and powerful performance. This upcoming model aims to further expand the range of options available to developers and researchers.

Both the 9 billion and 27 billion parameter sizes come in base and instruction-tuned variants. The 9B model was trained on approximately 8 trillion tokens and the 27B model on about 13 trillion tokens, drawn from web data, code, and math. Both models have a context length of 8,192 tokens. The instruction-tuned variants are named “gemma-2-9b-it” and “gemma-2-27b-it”, while the base models are simply “gemma-2-9b” and “gemma-2-27b”.
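The naming scheme and context limit above can be captured in a few lines. This is a minimal sketch with hypothetical names (`GemmaVariant`, `all_variants`, `fits_in_context`); it assumes the context window is 8,192 tokens and that the prompt and the generated tokens share that budget.

```python
from dataclasses import dataclass

CONTEXT_LENGTH = 8192  # assumption: the 8K context window is 8,192 tokens


@dataclass(frozen=True)
class GemmaVariant:
    size: str  # "9b" or "27b"
    instruction_tuned: bool

    @property
    def name(self) -> str:
        """Build the checkpoint name, adding "-it" for instruction-tuned models."""
        suffix = "-it" if self.instruction_tuned else ""
        return f"gemma-2-{self.size}{suffix}"


def all_variants() -> list[GemmaVariant]:
    """List the four released variants: base and instruction-tuned per size."""
    return [
        GemmaVariant(size, tuned)
        for size in ("9b", "27b")
        for tuned in (False, True)
    ]


def fits_in_context(prompt_tokens: int, max_new_tokens: int,
                    context_length: int = CONTEXT_LENGTH) -> bool:
    """Check that the prompt plus the requested output stays within the window."""
    return prompt_tokens + max_new_tokens <= context_length


if __name__ == "__main__":
    for variant in all_variants():
        print(variant.name)
```

A check like `fits_in_context` is useful before generation, since a long prompt reduces the number of tokens the model can still produce.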

These lightweight models are designed to run efficiently on various hardware, including NVIDIA GPUs and Google’s TPUs, making them suitable for both cloud and on-device applications. This flexibility means Gemma 2 can be integrated into a wide range of AI projects and workflows, from research environments to commercial applications.

The release of Gemma 2 represents a significant step forward in Google’s efforts to democratize AI technology. By providing powerful, efficient, and accessible models, Google aims to empower developers and researchers worldwide to tackle pressing challenges and drive innovation in artificial intelligence. As the AI landscape continues to evolve rapidly, open-model initiatives like Gemma 2 play a crucial role in fostering a diverse and collaborative ecosystem of AI development.

Copyright©dhaka.ai
