The artificial intelligence arms race is heating up, with tech giants scrambling to stake their claim in the rapidly evolving generative AI landscape. Leading the charge is OpenAI's ChatGPT, which took the world by storm in late 2022, captivating users with its ability to generate human-like text on virtually any topic. Not to be outdone, Meta (formerly Facebook) has unveiled its own AI assistant, Meta AI, powered by the company's latest large language model, Llama 3.

Meta's move is a bold attempt to catch up with the likes of OpenAI and Google in the generative AI space. The company has integrated Meta AI into its core products, including Facebook, Instagram, WhatsApp, and Messenger, giving its billions of users direct access to the assistant. This strategy mirrors Meta's earlier playbook with formats like Stories and Reels: Snapchat and TikTok pioneered them, and Meta then built them into its own apps, where its scale amplified their reach.

The integration of Meta AI into the company’s various platforms presents both opportunities and challenges for Meta and its rivals. On the one hand, Meta’s vast user base provides an unparalleled platform for the rapid adoption of its AI assistant, potentially allowing it to leapfrog competitors in terms of user engagement and data collection. However, this ubiquity also raises concerns about privacy and the responsible use of AI, particularly given Meta’s checkered past with user data.

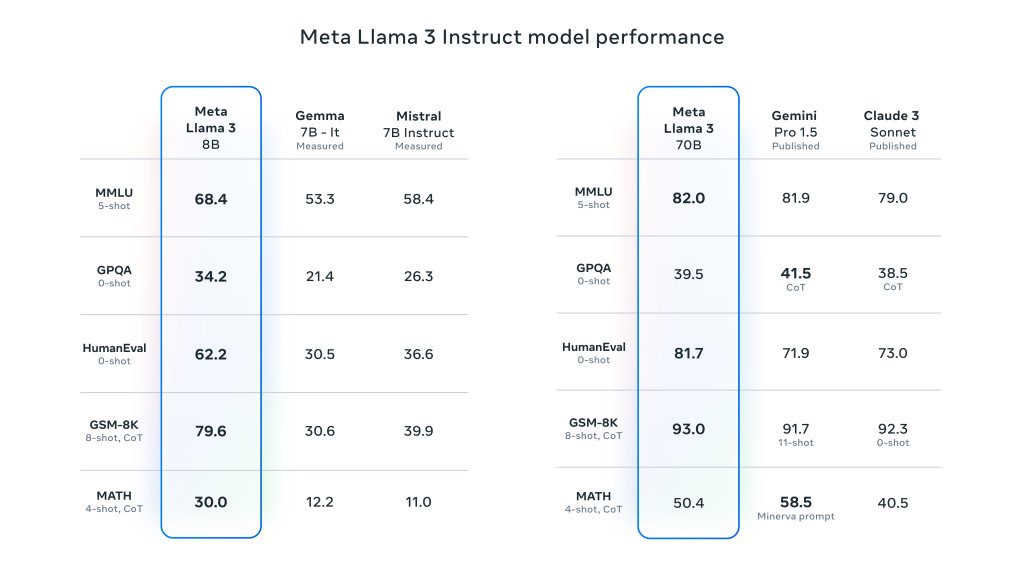

From a technical standpoint, Meta claims that Llama 3, the language model underpinning Meta AI, outperforms competing models in areas such as reasoning, coding, and creative writing. The company has also integrated real-time search results from Bing and Google into Meta AI, enhancing its ability to provide up-to-date and comprehensive information to users.

Nevertheless, rivals like OpenAI and Google are not standing still. OpenAI is rumored to be working on GPT-5, a potential successor to the GPT-4 model that powers ChatGPT. Google, meanwhile, has its own AI initiatives, including the Gemini chatbot, whose ability to generate images of people Google recently paused after it produced inaccurate depictions of historical figures.

One of the key challenges for all generative AI models is addressing issues related to bias, safety, and the potential for misuse. Meta has acknowledged this concern and claims to have reduced the rate of “false refusals” in Llama 3, where the model incorrectly declines to respond to harmless prompts. However, the company remains vague about the specifics of its training data and the measures taken to mitigate potential biases.

Another area of contention is whether AI models should be open-sourced. Meta has embraced an open approach with Llama 3, releasing the smaller 8-billion- and 70-billion-parameter versions to developers and expressing a willingness to release even the largest, 400-billion-parameter version. This strategy could be a double-edged sword: while it fosters innovation and collaboration within the AI community, it also raises concerns about bad actors misusing powerful AI models.

OpenAI and Google have taken a more cautious approach, closely guarding their flagship models and offering researchers and developers only limited access. This approach aims to maintain control over the technology and mitigate potential risks, but it may also slow the pace of innovation and raises questions about transparency and accessibility.

The generative AI race presents a complex set of challenges and opportunities for all involved parties. On one hand, the rapid advancement of AI technology holds tremendous promise for enhancing human capabilities and tackling complex problems across industries. AI assistants like Meta AI and ChatGPT have already demonstrated their potential in areas like creative writing, coding, and task automation, offering glimpses of a future where human-AI collaboration becomes seamless and ubiquitous.

However, the breakneck pace of AI development also raises valid concerns about safety, ethics, and the potential for misuse. As these powerful models become more capable and widely available, the risks of generating harmful or biased content, perpetuating misinformation, or enabling malicious actors to exploit the technology for nefarious purposes become increasingly significant.

Addressing these challenges requires a balanced approach: one that fosters responsible innovation while prioritizing safety and ethical considerations. Companies like Meta, OpenAI, and Google must invest heavily in robust safeguards and transparent governance frameworks to ensure that generative AI systems are developed and deployed responsibly.

This could involve measures such as:

- Rigorous testing and auditing of AI models for bias, safety, and compliance with ethical guidelines.

- Implementing robust security measures to prevent unauthorized access and misuse of AI models.

- Developing clear guidelines and policies for the responsible use of AI assistants, with mechanisms for accountability and redress in case of misuse.

- Collaborating with policymakers, researchers, and civil society organizations to establish industry-wide standards and best practices for AI ethics and governance.

- Investing in public education and awareness campaigns to promote responsible AI adoption and address concerns about the technology’s impact on privacy, employment, and societal well-being.

Companies should also strike a balance between open-source and proprietary approaches to AI model development. While open-sourcing models can accelerate innovation and foster collaboration, it also carries risks of misuse. A hybrid approach, in which core models remain proprietary while certain components or smaller versions are open-sourced, could balance innovation against risk mitigation.

Ultimately, the generative AI race is not just a technological competition; it is a litmus test for the responsible development and adoption of transformative technologies. As AI capabilities continue to advance at a dizzying pace, it is incumbent upon tech companies, policymakers, and society at large to ensure that these powerful tools are harnessed for the greater good, while mitigating potential risks and addressing ethical concerns.

The road ahead is fraught with challenges, but the potential rewards of responsible AI adoption are immense – from unlocking new frontiers in scientific discovery and problem-solving to enhancing human productivity and creativity in ways we can scarcely imagine. By embracing a collaborative, ethical, and forward-thinking approach, we can chart a course towards a future where AI is a force for good, amplifying our collective potential while preserving the values that define our humanity.

Copyright © dhaka.ai

tags: Artificial Intelligence, Ai, Dhaka Ai, Ai In Bangladesh, Ai In Dhaka, OpenAI, ChatGPT, Meta, Google, Claude